Methods to Make Your Try ChatGPT Look Amazing in 7 Days


Posted by Tracey on 25-02-12 at 07:53

If they have never done design work, they may put together a visual prototype. In this section, we highlight some of these key design choices. The actions described are passive and do not highlight the candidate's initiative or impact. KubeMQ's low latency and high performance ensure near-immediate message delivery, which is important for real-time GenAI applications, where delays can significantly affect user experience and system efficacy. This ensures that each component of the AI system receives exactly the data it needs, when it needs it, without unnecessary duplication or delays. This integration ensures that as new data flows through KubeMQ, it is stored in FalkorDB, making it readily available for retrieval without introducing latency or bottlenecks. In addition, the chat service's global edge network provides low-latency chat and a 99.999% uptime guarantee. This feature significantly reduces latency by keeping data in RAM, close to where it is processed.
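The routing idea described above (each component subscribes only to the data it needs, and ingested data lands in a store ready for retrieval) can be sketched in a few lines. This is a minimal, in-process illustration under stated assumptions: it does not use the KubeMQ or FalkorDB client libraries, and the `InMemoryBroker` class, the `rag.ingest` channel name, and the dict-based `knowledge_store` are all hypothetical stand-ins.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class InMemoryBroker:
    """Hypothetical in-process stand-in for a message broker such as KubeMQ.

    It does NOT use the KubeMQ SDK; it only illustrates channel-based routing.
    """

    def __init__(self) -> None:
        # Channel name -> list of subscriber callbacks.
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[channel].append(handler)

    def publish(self, channel: str, message: dict) -> int:
        """Deliver the message to every subscriber of `channel`; return the delivery count."""
        handlers = self._subscribers.get(channel, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


# Hypothetical stand-in for the knowledge store (FalkorDB in the article):
# a plain dict held in RAM, keyed by document id.
knowledge_store: Dict[str, str] = {}


def store_document(message: dict) -> None:
    # Ingest handler: makes the new document immediately available for retrieval.
    knowledge_store[message["id"]] = message["text"]


broker = InMemoryBroker()
broker.subscribe("rag.ingest", store_document)

delivered = broker.publish("rag.ingest", {"id": "doc-1", "text": "KubeMQ routes messages."})
print(delivered, knowledge_store)
```

Only subscribers of a channel receive its messages, so a component that never subscribes to `rag.ingest` is not sent ingestion traffic; a production broker adds persistence, acknowledgements, and network transport on top of this pattern.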

However, if you want to define more partitions, you can allocate more space to the partition table (currently only gdisk is known to support this feature). I did not want to over-engineer the deployment; I wanted something quick and simple. Retrieval: fetching relevant documents or data from a dynamic knowledge base, such as FalkorDB, which provides fast, efficient access to the latest and most pertinent information. This approach ensures that the model's answers are grounded in the most relevant and up-to-date information available in our documentation. The model's output can also track and profile individuals by collecting information from a prompt and associating it with the user's phone number and email. 5. Prompt Creation: the selected chunks, together with the original question, are formatted into a prompt for the LLM. This approach lets us feed the LLM current information that was not part of its original training, leading to more accurate and up-to-date answers.
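The prompt-creation step (selected chunks plus the original question, formatted into one prompt) might look like the sketch below. The template wording and the `build_prompt` name are assumptions for illustration; the article does not show its actual prompt.

```python
from typing import List


def build_prompt(question: str, chunks: List[str]) -> str:
    """Format retrieved chunks and the original question into a single LLM prompt.

    Hypothetical template; the article's real prompt wording is not shown.
    """
    # Number each chunk so the model (and a human reviewer) can refer back to it.
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "Where does FalkorDB keep its data?",
    ["FalkorDB keeps data in RAM.", "KubeMQ routes new data to FalkorDB."],
)
print(prompt)
```

Instructing the model to answer "using only the context" is one common way to keep answers grounded in the retrieved documentation rather than in the model's training data.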

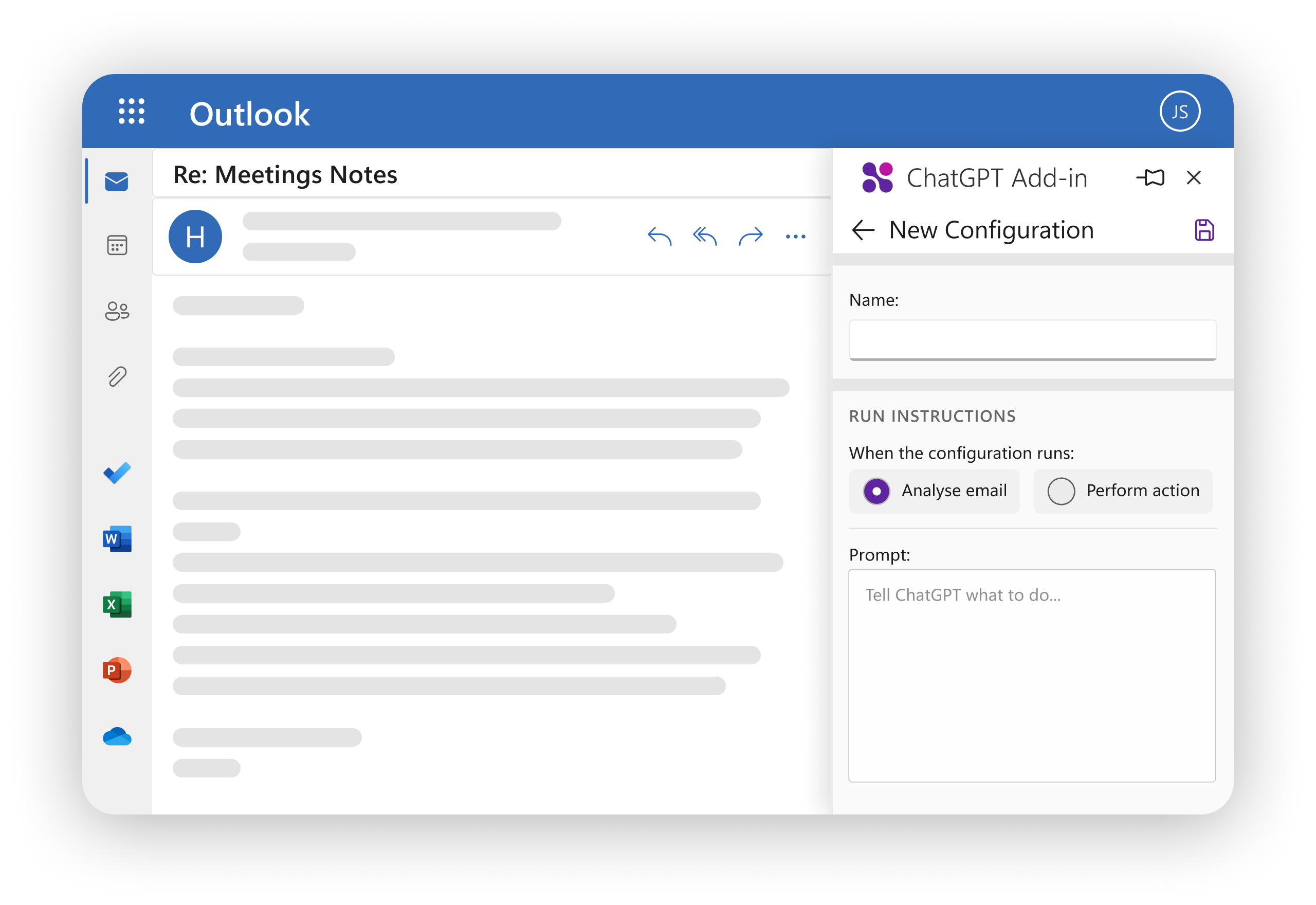

RAG (retrieval-augmented generation) is a paradigm that enhances generative AI models by integrating a retrieval mechanism, allowing models to access external knowledge bases during inference. KubeMQ, a robust message broker, offers a way to streamline the routing of multiple RAG processes, ensuring efficient data handling in GenAI applications. It allows us to continually refine our implementation, ensuring we deliver the best possible user experience while managing resources efficiently. What's more, being part of this program provides students with valuable resources and training to make sure they have everything they need to face their challenges, achieve their goals, and better serve their community. While we remain committed to offering guidance and fostering community in Discord, support through this channel is limited by staff availability. In 2008 the company saw a double-digit increase in conversions by relaunching its online chat support. You can start a personal chat immediately with random girls online. 1. Query Reformulation: we first combine the user's question with the current user's chat history from the same session to create a new, standalone query.
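Query reformulation (combining the session's chat history with the new question to get a standalone query) is usually delegated to an LLM. The sketch below assumes that: `llm` is a hypothetical callable that takes a prompt string and returns a completion, so any client can be plugged in. The `max_turns` window and the prompt wording are also assumptions.

```python
from typing import Callable, List, Tuple

# (role, text) pairs from the current session, oldest first.
ChatHistory = List[Tuple[str, str]]


def reformulate_query(
    history: ChatHistory,
    question: str,
    llm: Callable[[str], str],
    max_turns: int = 6,
) -> str:
    """Ask an LLM to rewrite a follow-up question as a standalone query.

    `llm` is a hypothetical prompt -> completion callable. Only the most recent
    `max_turns` turns are included to bound the prompt size.
    """
    if not history:
        # Nothing to resolve against: the question already stands alone.
        return question
    recent = history[-max_turns:]
    transcript = "\n".join(f"{role}: {text}" for role, text in recent)
    prompt = (
        "Rewrite the final question so it can be understood without the conversation.\n\n"
        f"Conversation:\n{transcript}\n\n"
        f"Final question: {question}\n"
        "Standalone question:"
    )
    return llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    # Canned completion, for illustration only; a real model would read the prompt.
    return " How does KubeMQ scale horizontally? "


history = [("user", "What is KubeMQ?"), ("assistant", "A message broker.")]
print(reformulate_query(history, "How does it scale?", fake_llm))
```

The standalone query, rather than the raw follow-up ("How does it scale?"), is what gets sent to retrieval, so the pronoun "it" no longer depends on context the retriever cannot see.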

For our current dataset of about 150 documents, this in-memory approach gives very fast retrieval times. Future Optimizations: as our dataset grows and we potentially move to cloud storage, we are already considering optimizations. As prompt engineering continues to evolve, generative AI will likely play a central role in shaping the future of human-computer interaction and NLP applications. 2. Document Retrieval and Prompt Engineering: the reformulated query is used to retrieve relevant documents from our RAG database. For comparison, when a user submits a prompt to GPT-3, the model must use all 175 billion of its parameters to produce an answer. In scenarios such as IoT networks, social media platforms, or real-time analytics systems, new data is produced constantly, and AI models must adapt quickly to incorporate it. KubeMQ handles high-throughput messaging by providing a scalable, robust infrastructure for routing data between services. It supports horizontal scaling to absorb increased load, and it also offers message persistence and fault tolerance.
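To make the "in-memory retrieval over roughly 150 documents" point concrete, here is a minimal sketch of a brute-force in-memory index. It swaps in simple term-frequency cosine similarity in place of whatever embedding model the original system uses; the `InMemoryIndex` class and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    # Lowercase word tokens; a real RAG system would more likely use embeddings.
    return re.findall(r"[a-z0-9]+", text.lower())


class InMemoryIndex:
    """Keeps a term-frequency vector for every document in RAM.

    A brute-force scan is cheap for ~150 documents; much larger corpora would
    typically call for an approximate nearest-neighbour index instead.
    """

    def __init__(self, docs: Dict[str, str]) -> None:
        self._vectors = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}

    @staticmethod
    def _cosine(a: Counter, b: Counter) -> float:
        dot = sum(count * b[term] for term, count in a.items())
        norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
        return dot / norm if norm else 0.0

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = Counter(tokenize(query))
        scored = [(doc_id, self._cosine(q, vec)) for doc_id, vec in self._vectors.items()]
        # Highest similarity first; ties broken by document id for stable output.
        scored.sort(key=lambda pair: (-pair[1], pair[0]))
        return scored[:k]


docs = {
    "kubemq": "KubeMQ is a message broker that supports horizontal scaling.",
    "falkordb": "FalkorDB keeps graph data in RAM for fast retrieval.",
    "gdisk": "gdisk can enlarge the partition table.",
}
index = InMemoryIndex(docs)
print(index.search("How does retrieval stay fast in RAM?", k=1))
```

Every query scans all vectors, so cost grows linearly with the corpus; that is fine at this scale and is exactly the kind of thing the "Future Optimizations" note anticipates revisiting.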
